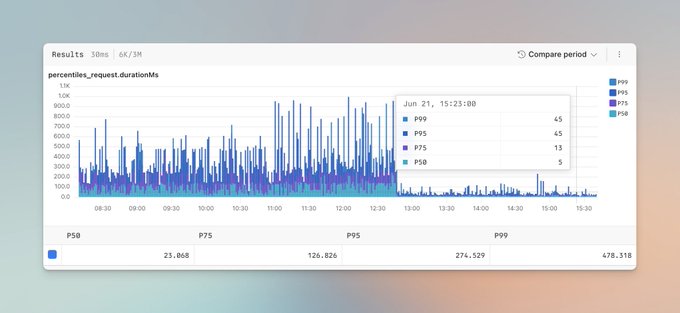

edge workers → fluid functions · 489ms → 45ms at p99 · bespoke → nodejs · dub is doing 360M+ requests per month

TFW you activate @vercel fluid compute for one of your most active API endpoints and your p99 goes off a cliff.

478ms → 45ms = over 10x latency reduction 🤯

h/t @AxiomFM for the o11y logs – been using them since day 1 @dubdotco